Introduction

If you have ever wanted to run your own large language model (LLM, the type of generative AI behind chatbots like ChatGPT) on your laptop or desktop, you are in the right place. We will look at the simplest way to run AI models on personal hardware, and I will try to keep this guide engaging and simple so that most of you can follow along with ease.

Running a local LLM on your PC has a number of advantages, along with some limitations. Keep in mind that there are many ways to run your own AI, and we will cover just one of them so that this guide stays non-technical and easy to follow.

Without further ado, let’s get right into it and set up our own personal local LLM.

Setup Guide for Enthusiasts

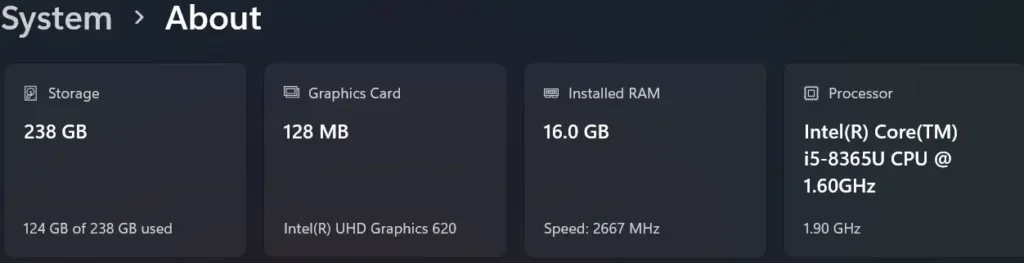

Before we begin, there are a few key considerations to keep in mind. The model you choose should fit in your GPU's memory (VRAM) or in your system RAM. If you do not have a graphics card, you can still run a model as long as it fits in your RAM, but it will be a lot slower. Avoid models that are too big for your hardware; otherwise, responses will crawl or the model may not load at all.

Hardware Requirements

There are many different AI models, and they vary widely in size: the larger the model, the more memory it needs. Suppose you have a PC with 16 GB of RAM and a GPU with 6 GB of video memory. In that case, you should generally stick to models with fewer than 10 billion parameters at 4-bit quantization.
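To make this rule of thumb a little more concrete, here is a rough Python sketch of the arithmetic. A model's weights take roughly parameters x bits per weight / 8 bytes. The 20% overhead for the context and runtime buffers, and the 4 GB of RAM kept free for the operating system, are my own assumptions rather than figures from LM Studio.

```python
def estimate_model_gb(params_billion: float, bits_per_weight: float,
                      overhead: float = 0.20) -> float:
    """Rough memory (GB) to load a model: weights plus an assumed overhead."""
    weight_bytes = params_billion * 1e9 * bits_per_weight / 8
    return weight_bytes * (1 + overhead) / 1e9


def placement(params_billion: float, bits_per_weight: float,
              vram_gb: float, ram_gb: float,
              reserve_ram_gb: float = 4.0) -> str:
    """Classify where a model would run, based on the estimate above."""
    need_gb = estimate_model_gb(params_billion, bits_per_weight)
    if need_gb <= vram_gb:
        return "fits in GPU memory"
    # Whatever does not fit in VRAM spills into system RAM (much slower).
    usable_ram_gb = max(ram_gb - reserve_ram_gb, 0.0)
    if need_gb <= vram_gb + usable_ram_gb:
        return "runs partly or fully from system RAM (slower)"
    return "too large for this machine"


# Example PC from the text: 6 GB of VRAM and 16 GB of RAM.
for size in (4, 8, 13, 70):
    print(f"{size}B @ 4-bit: ~{estimate_model_gb(size, 4):.1f} GB ->",
          placement(size, 4, vram_gb=6, ram_gb=16))
```

With these assumptions, an 8B model at 4 bits comes out at about 4.8 GB and fits in the GPU, while a 70B model needs about 42 GB and does not fit at all. Real downloads are often somewhat larger than this estimate, because quantized files also store scaling factors and keep some tensors at higher precision.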

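In simple terms, quantization stores a model's weights at lower precision (for example, 4 bits per weight instead of 16). This shrinks the model enough to run larger models on consumer hardware with only a small loss in quality. Different quantization schemes exist for different models. For local use, the most popular format is GGUF, which packages models at various quantization levels.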
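To show what quantization actually does, here is a toy Python sketch of symmetric 4-bit quantization on a single block of weights. Each weight is divided by a shared scale and rounded to a small integer between -8 and 7, so it can be stored in 4 bits; dequantizing multiplies it back by the scale. This is a simplified illustration of the idea, not the exact algorithm behind GGUF quantization types, and the weight values are made up.

```python
def quantize_block(weights, bits=4):
    """Symmetric quantization: one float scale plus small signed integer codes."""
    qmax = 2 ** (bits - 1) - 1            # 7 for 4 bits; codes span -8..7
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    codes = [max(-qmax - 1, min(qmax, round(w / scale))) for w in weights]
    return scale, codes


def dequantize_block(scale, codes):
    """Recover approximate weights from the stored scale and codes."""
    return [c * scale for c in codes]


# Made-up weights for illustration only.
weights = [0.12, -0.53, 0.07, 0.91, -0.33, 0.0, 0.45, -0.88]
scale, codes = quantize_block(weights)
restored = dequantize_block(scale, codes)
max_error = max(abs(w - r) for w, r in zip(weights, restored))

print("codes:", codes)
print(f"scale: {scale:.4f}, worst rounding error: {max_error:.4f}")
```

Each restored weight is off by at most half the scale. That small error is the quality loss quantization trades for a model roughly 4x smaller than its 16-bit version (ignoring the little extra space the scales take).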

Installing Text-Generation Software

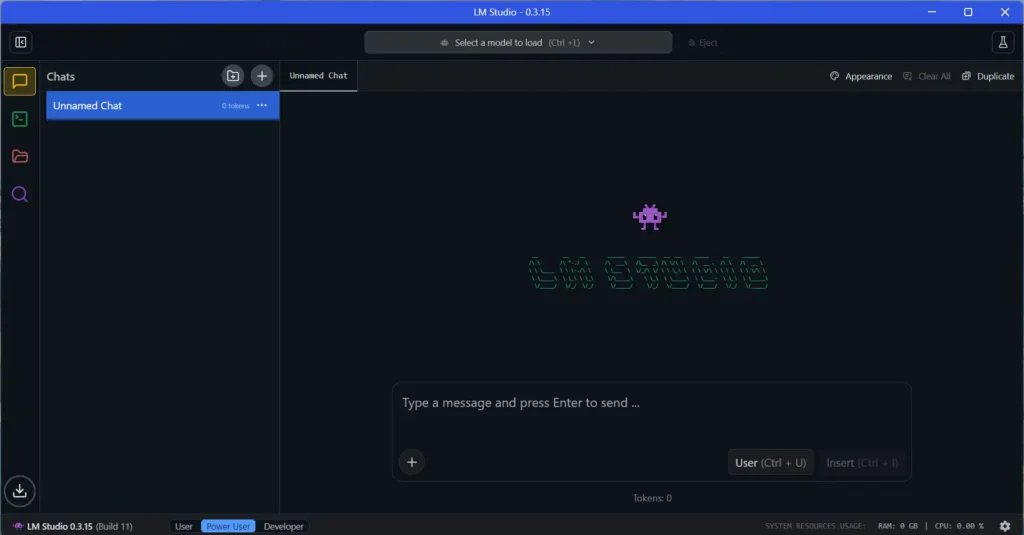

In this step, we will install the software needed to run an LLM on our PC. There are plenty of options for running LLMs locally, such as Msty, Kobold, and LM Studio. I will be using LM Studio because it is the one I am most familiar with (you can try the others once you are more comfortable with local LLMs).

LM Studio is also easy to use and requires almost no configuration on your end. You can download it from its official site. Once the download finishes, run the installer and follow the steps to complete the installation.

Where to Download AI Models

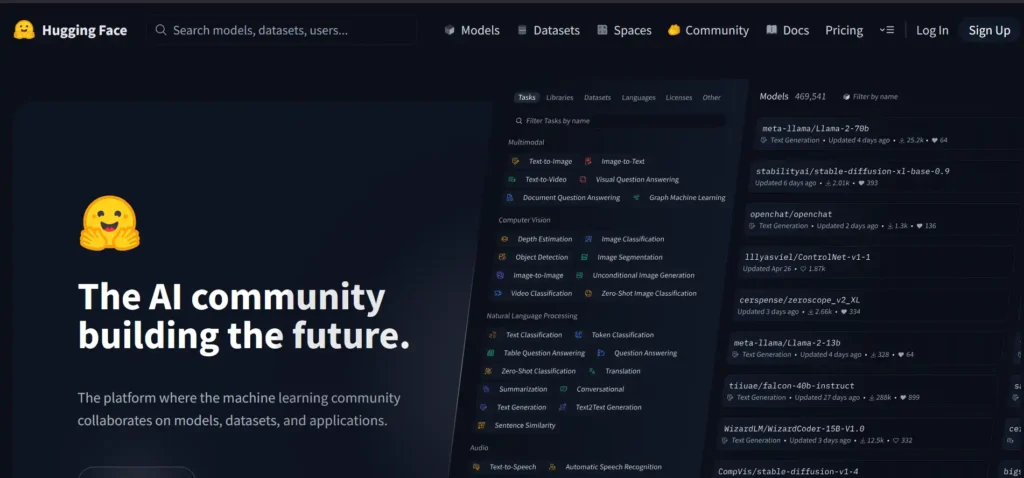

With the software installed, we now need a place to download models from. Hugging Face is the go-to hub where most major companies publish their open models for users to download. If that sounds complicated, do not worry: LM Studio has a built-in link to Hugging Face.

This lets you download models straight to your drive from within the application. Google, Meta, Mistral, Microsoft, and others have released many good models, ranging from 1 billion to over 100 billion parameters (generally, larger models perform better but need more hardware resources to run).

Choosing a Large Language Model

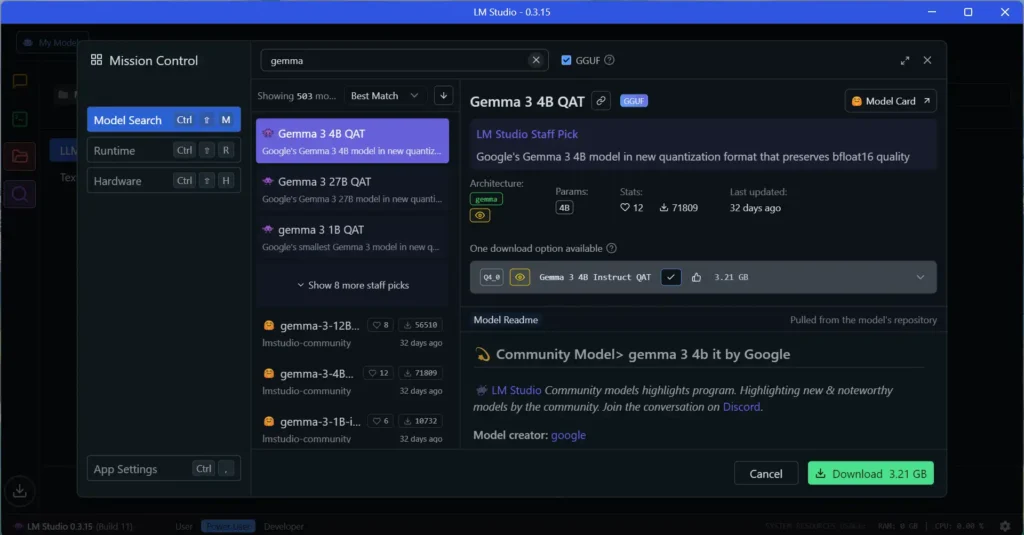

For this article, I used my old Dell laptop, which has no dedicated graphics card and just 16 GB of RAM. The model I selected is Google Gemma 3 4B QAT (QAT stands for quantization-aware training), which is around 3 GB in size. I chose this setup to demonstrate that running an LLM is possible on most hardware configurations.

Search for the model in LM Studio’s search bar, and you will find a download link for this quantized model from the LM Studio Community page. Pick the right model and click Download. Then just wait for it to finish so we can move on to running our personal LLM on our PC or laptop.

Running Your Personal AI

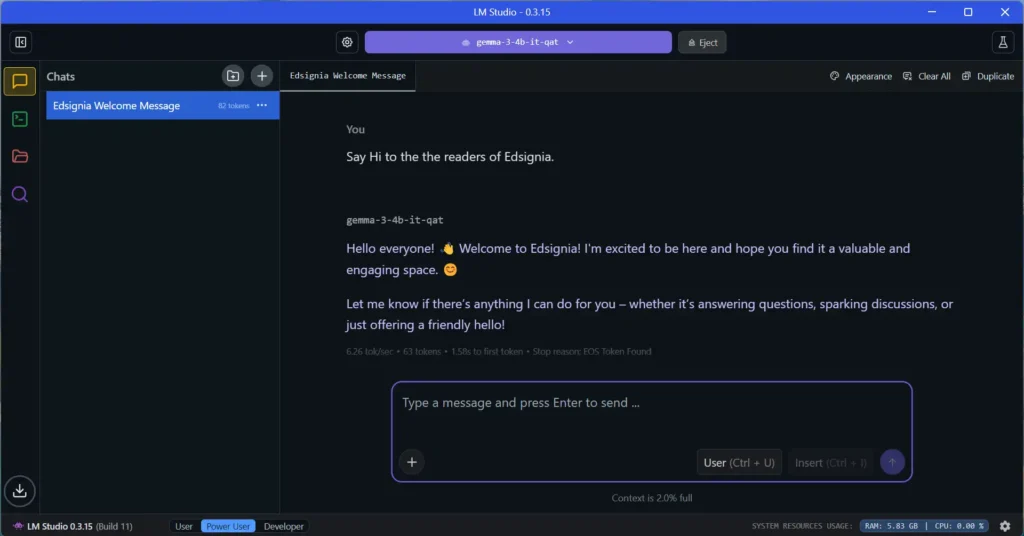

Once the download is complete, you will find the model in LM Studio’s drop-down menu. Select it, and LM Studio will automatically decide how many of the model's layers to offload to the GPU (in my case, it runs on the CPU only). Now load the model; once it is fully loaded into RAM, you can start asking questions.
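If you are curious what "offloading layers" means, here is a rough Python sketch of the kind of calculation involved. It assumes every layer is the same size and keeps about 1 GB of VRAM in reserve for the context and display. This is a simplification for illustration, not how LM Studio actually makes its choice, and the model sizes below are hypothetical.

```python
def layers_to_offload(model_gb: float, n_layers: int,
                      vram_gb: float, vram_reserve_gb: float = 1.0) -> int:
    """Estimate how many equally sized layers fit in GPU memory."""
    if n_layers <= 0 or model_gb <= 0:
        raise ValueError("model_gb and n_layers must be positive")
    per_layer_gb = model_gb / n_layers
    usable_gb = max(vram_gb - vram_reserve_gb, 0.0)
    # Layers that do not fit on the GPU stay in system RAM and run on the CPU.
    return min(n_layers, int(usable_gb // per_layer_gb))


# Hypothetical 32-layer models on a 6 GB GPU, and on a laptop with no GPU.
print(layers_to_offload(3.0, 32, vram_gb=6))   # small model: every layer fits
print(layers_to_offload(8.0, 32, vram_gb=6))   # bigger model: partial offload
print(layers_to_offload(3.0, 32, vram_gb=0))   # CPU only, like my laptop
```

The more layers that sit on the GPU, the faster the responses; with no GPU at all, everything runs from RAM on the CPU, which is why my laptop is slower than a gaming PC would be.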

I have attached a sample chat between me and the model running on my laptop (for such a small model, it is surprisingly smart). Models come in many different sizes, and you are free to run whichever one your computer can handle.

Pros of Running a Local LLM

At this point, a perfectly reasonable question may pop into your mind: “Why would anyone run AI on their own computer?” There are plenty of advantages to doing so, and there is a large community on Reddit centered around this hobby. Let us look at what draws so many people to local LLMs in the first place.

Free

Arguably, the biggest advantage of running AI models on your own hardware is that it is completely free (beyond the hardware you already own). You do not even need a high-spec PC just to try AI locally: I ran a very small yet capable AI model on my Dell Latitude laptop, which does not even have a graphics card.

It is no match for the big players such as Google Gemini or OpenAI ChatGPT (not even close), but it does prove a point. For a hobby project where you just want to tinker with local LLMs, it is entirely possible. Best of all, you do not need to spend a dime to learn about and use large language models locally.

Runs Completely Offline

There is another audience that uses local AI regularly: privacy-conscious folks who want their AI chats to stay on their own hardware. Their reasons vary, ranging from needing round-the-clock offline access to wanting to keep their data on their personal computers.

Whatever the reason, offline use is a great strength of local AI models. Suppose your Wi-Fi is down for maintenance and you need to learn about “good practices for giving a presentation”. In that case, the local LLM on your laptop can still help you.

Choice of Models

As I mentioned earlier, there are many different models available on Hugging Face, and you can pick whichever one meets your needs and fits your hardware. This large catalogue draws a lot of people into tinkering and experimenting with LLMs locally.

Since my laptop has no GPU, I opted for a small yet capable AI model. It met every goal I had for this piece: it let me demonstrate how to run an LLM locally and showed that people with limited hardware can try it out as well.

Choice of Software

For this article, I used LM Studio for demonstration purposes. However, you are not limited to one piece of software for trying out AI on your own device. A number of different applications make it possible to run LLMs on your computer.

Some popular ones are Msty, Kobold, and JanAI. I picked LM Studio for this guide, but once you are familiar with local AI, you can give the others a try. There is no vendor lock-in with local LLMs, which is a major plus for some users.

Portability

It may come as a surprise, but you can carry your own models with you on a personal hard drive. If you do not have much space on your laptop, you can keep your favourite LLMs on an external hard disk and simply point LM Studio to the folder where those models are stored.

LM Studio will then detect the models and list them all in its interface. For many of you, portability might be an important factor, and local LLMs handle it well. For a hobby like local AI, portability is a nice feature that makes it even more appealing to AI enthusiasts.
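Before pointing LM Studio at an external drive, you can check which model files are actually there. This short Python sketch lists every .gguf file under a folder along with its size. The drive path in the example is hypothetical, so replace it with your own.

```python
from pathlib import Path


def find_gguf_models(models_dir):
    """Return (relative path, size in GB) for each .gguf file under models_dir."""
    root = Path(models_dir)
    models = []
    for path in sorted(root.rglob("*.gguf")):
        if path.is_file():
            models.append((path.relative_to(root), path.stat().st_size / 1e9))
    return models


if __name__ == "__main__":
    # Hypothetical location of a models folder on an external drive.
    drive = Path("E:/LLM-Models")
    if drive.exists():
        for rel_path, size_gb in find_gguf_models(drive):
            print(f"{size_gb:6.2f} GB  {rel_path}")
    else:
        print(f"{drive} not found; update the path to your drive.")
```

The search is recursive, so it also finds models kept in nested publisher and model subfolders.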

Cons of Running a Local LLM

Like all good things, running an LLM on your computer has its downsides. I wish it were all pros and no cons, but that is not the case for anything in life. Let us look at some of the major disadvantages of local LLMs that make people stick to online services for their AI needs.

Cost of Hardware

We showed that it is possible to run AI on most low-end hardware, but there is a catch. Running truly large models (the first “L” in LLM stands for “large”) requires a high-end PC. Small AI models are good enough for simple tasks, but they pale in comparison to their bigger cousins.

Running large models on your PC is possible, but it requires an upfront investment. For many of us (including me), a hobby is not worth such high costs. As a result, many people decide against taking up local AI as a hobby.

Requires Technical Skills

I tried to make this guide as simple and easy to follow as I could, and people who are already interested in running AI at home will find it doable. However, many people will still feel overwhelmed by the technicalities involved.

Because of this, local AI mostly caters to a tech-savvy audience. Some people will not pick it up as a hobby for a variety of reasons, the most common being the steep learning curve that comes with running large language models on our own computers.

Needs Lots of Storage

The model I used for this article was a small quantized variant of Gemma 3 4B, about 3 GB in size. Larger models can take up hundreds of gigabytes, which is a real challenge for anyone with limited storage. On top of that, most local LLM enthusiasts keep several models on their device at the same time, which takes up a lot of space.

Many of us cannot set aside hundreds of gigabytes (or possibly even terabytes) for a single hobby. This storage-hungry nature deters a lot of potential enthusiasts, and buying more storage may not make sense for most people, which makes it a major drawback of running LLMs locally.
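If storage is your concern, a quick check before each download can save you a headache. This Python sketch totals the .gguf files in a folder and uses the standard library's `shutil.disk_usage` to see whether a planned download still leaves some free space. The 20 GB safety margin and the example folder path are my own assumptions.

```python
import shutil
from pathlib import Path


def models_size_gb(models_dir) -> float:
    """Total size (GB) of all .gguf files under models_dir."""
    return sum(p.stat().st_size for p in Path(models_dir).rglob("*.gguf")
               if p.is_file()) / 1e9


def can_download(models_dir, download_gb: float,
                 keep_free_gb: float = 20.0) -> bool:
    """True if the download still leaves keep_free_gb free on that drive."""
    free_gb = shutil.disk_usage(models_dir).free / 1e9
    return free_gb - download_gb >= keep_free_gb


if __name__ == "__main__":
    # Hypothetical models folder; point this at wherever your models live.
    folder = Path.home() / "LLM-Models"
    if folder.exists():
        print(f"Models stored here: {models_size_gb(folder):.1f} GB")
        print("Room for a 40 GB download?", can_download(folder, 40))
    else:
        print(f"{folder} not found; update the path first.")
```

Note that `shutil.disk_usage` reports free space for the whole drive the folder sits on, so the check accounts for everything stored there, not just your models.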

Performance Variability

Earlier, we noted that bigger models generally perform better than smaller ones. This is not a clear-cut rule, as many factors determine how an AI model performs in real-world use, including the number of training tokens, the model architecture, the quality of the dataset, and more.

As a result, it is hard to predict exactly how a model will perform or how it compares to a similar-sized model. With so many models available, comparing them for your own use cases would take a lot of time. This variability makes local LLMs much less appealing for regular users who want the one-size-fits-all model that cloud providers like Google and OpenAI offer.

Troubleshooting

When using AI models locally, problems can arise at any time, and users have to tackle them on their own, which can be a challenge for many. The problem may be a simple configuration issue or a major technical one.

Whatever the problem, it is up to you to find the solution, usually by browsing forums. After hitting one roadblock after another, a user may choose to drop local LLMs altogether and stick with online AI services, which spare them this troubleshooting.

Conclusion

If you are still reading, I hope we succeeded in bringing your personal AI assistant to life on your own hardware. We covered the easiest way to set up a local LLM on your personal computer. However, running large language models locally involves a lot of moving parts, which this article only briefly touched on.

There are many more aspects of local AI to explore, such as various quantization techniques, dense models, and mixture of experts (MoE) models. Let us know in the comments below if you would like an in-depth article covering these topics. Also, feel free to share your valuable feedback or suggestions for future articles by visiting our contact page.

You can also take a look at our recent article, “How to Disable News Feed Widget in Windows 11”. Have a good day, everyone, and I hope to see you soon with another informative piece.